Introduction

As organizations accelerate AI adoption, the mandate for 2026 is clear: automate more decisions, deploy more AI-driven workflows, and move faster than ever before.

However, AI strategies rarely fail because of technology limitations. They fail because businesses are not designed for how AI actually operates at scale. The most serious issues don’t appear in pilots or proofs of concept—they surface when AI systems interact with live data, real customers, operational constraints, and cost pressures.

Below are six critical areas where AI strategies are most likely to break under real-world conditions.

1. The Data Foundation Gap

The Error

Deploying AI agents on top of fragmented, outdated, or unverified data sources.

The Failure

Context blindness. AI agents can only reason over the data they can access. When data is spread across disconnected systems, decisions are made using incomplete or inaccurate context.

Scenario

An AI agent approves a premium offer based on CRM data showing a high-value customer. It has no visibility into unresolved billing issues or compliance flags stored in separate systems, nor awareness that the CRM data hasn’t been updated in years.

Reality Check

AI does not correct data issues—it amplifies them. Before scaling AI-driven actions, organizations must unify, validate, and govern their data foundations.
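To make the scenario above concrete, here is a minimal sketch of a pre-action context check. Everything in it is a hypothetical illustration rather than a reference to any specific product: the `CustomerContext` fields, the `offer_blockers` function, and the 90-day freshness threshold are all assumptions. The idea is simply that an agent should refuse to act when a record is stale or when a source system could not be consulted.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

# Assumed freshness threshold; a real value would come from data governance policy.
MAX_RECORD_AGE = timedelta(days=90)


@dataclass
class CustomerContext:
    """Hypothetical view of one customer, assembled from several systems."""
    crm_last_updated: date
    open_billing_issues: Optional[int]   # None means billing was not consulted
    compliance_flagged: Optional[bool]   # None means compliance was not consulted


def offer_blockers(ctx: CustomerContext, today: date) -> List[str]:
    """Return the reasons the agent should not act; an empty list means proceed."""
    blockers = []
    if today - ctx.crm_last_updated > MAX_RECORD_AGE:
        blockers.append("CRM record is stale")
    if ctx.open_billing_issues is None:
        blockers.append("billing data unavailable")
    elif ctx.open_billing_issues > 0:
        blockers.append("unresolved billing issues")
    if ctx.compliance_flagged is None:
        blockers.append("compliance data unavailable")
    elif ctx.compliance_flagged:
        blockers.append("compliance flag present")
    return blockers
```

Note that missing data is treated as a blocker, not as a pass. In this sketch, an agent that cannot see billing or compliance data is stopped in the same way as one that sees a problem.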

2. The Governance Void

The Error

Prioritizing AI capabilities over auditability, transparency, and operational control.

The Failure

Black-box liability. When AI decisions cannot be explained or audited, organizations lose control over compliance, budgets, and customer trust. In 2026, “the AI decided” is not an acceptable explanation.

Scenario

- An automated decision denies a request without traceability

- A support bot issues an unauthorized discount

- An AI-driven process violates internal policy with no clear accountability

Reality Check

AI governance is an operational requirement. It demands least-privilege access, human-in-the-loop approvals for high-impact actions, and continuous monitoring of AI behavior.
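As a rough illustration of those three controls working together, the sketch below gates every agent action through a permission check, a human-approval check, and an audit log. The role names, action names, and the `authorize` function are hypothetical, and a production system would back them with a real identity and logging platform.

```python
from dataclasses import dataclass, field

# Hypothetical policy tables: what each agent role may do, and what needs a human.
ALLOWED_ACTIONS = {"support_bot": {"answer_question", "issue_discount"}}
NEEDS_HUMAN_APPROVAL = {"issue_discount"}


@dataclass
class AuditLog:
    """In-memory stand-in for a durable audit trail."""
    entries: list = field(default_factory=list)

    def record(self, role, action, outcome):
        self.entries.append({"role": role, "action": action, "outcome": outcome})


def authorize(role, action, log, approved_by=None):
    """Return True only if the action is permitted; every decision is logged."""
    if action not in ALLOWED_ACTIONS.get(role, set()):
        outcome = "denied: outside role permissions"        # least privilege
    elif action in NEEDS_HUMAN_APPROVAL and approved_by is None:
        outcome = "pending: human approval required"        # human in the loop
    else:
        outcome = "allowed"
        if approved_by:
            outcome += f" (approved by {approved_by})"
    log.record(role, action, outcome)                        # traceability
    return outcome.startswith("allowed")
```

With a gate like this, the unauthorized discount in the scenario above would be held as pending instead of issued, and the log would show who eventually approved it.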

3. The Utility Gap (The 15-Second Rule)

The Error

Assuming conversational AI is always superior to existing point-and-click workflows.

The Failure

Users reject tools that increase time-to-completion. If AI introduces additional steps, typing, or waiting, adoption quickly declines.

Scenario

A task that once required a single click now requires a conversational command and response delay, slowing productivity rather than improving it.

Reality Check

Efficiency is measured by time saved, not intelligence added. If AI cannot outperform a button or a rule, it should not replace it.

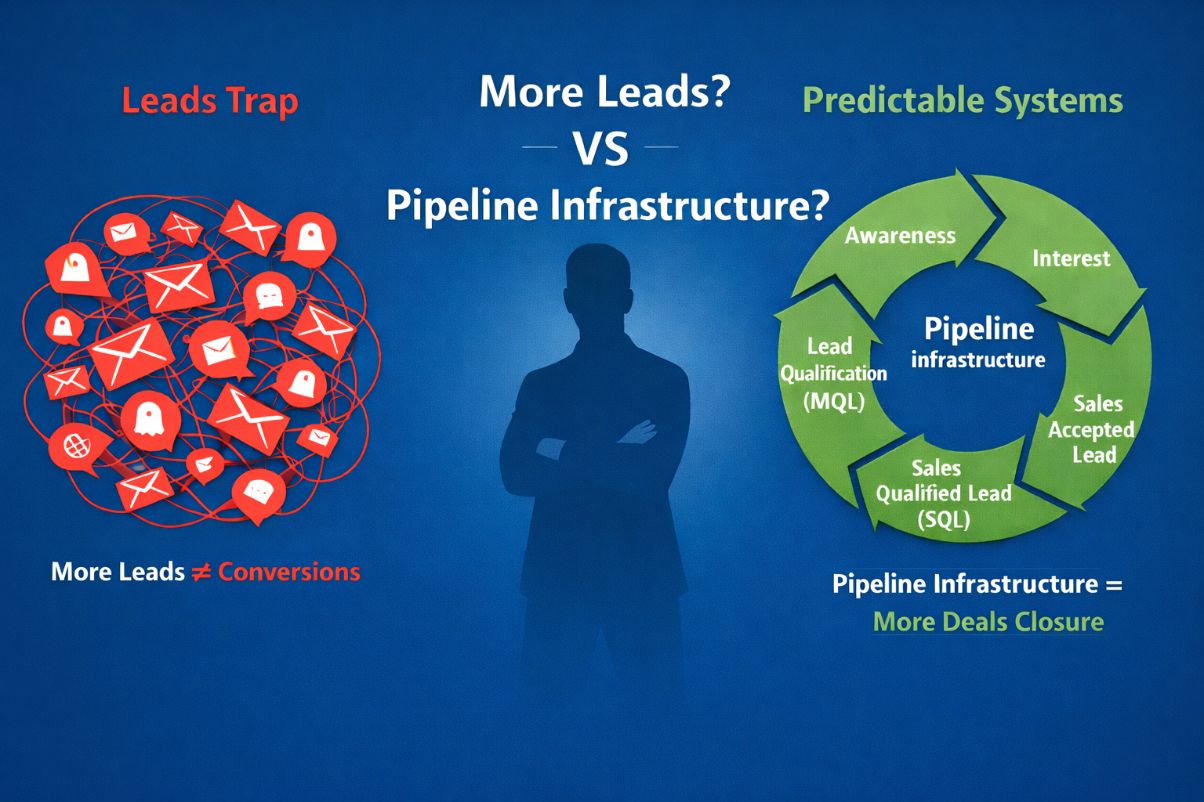

4. The Strategic Restraint Void (When NOT to Automate)

The Error

Assuming that because AI can be applied, it should be applied.

The Failure

Organizations invest in low-impact AI use cases that consume budget without delivering meaningful business value.

Scenario

AI is deployed to automate rigid, linear processes like monthly invoicing, while high-impact decision-making tasks—such as lead qualification or exception handling—remain manual.

Reality Check

AI agents should be reserved for workflows that require judgment and reasoning. Linear processes are better handled by scripts or traditional automation.

5. When AI Overwhelms Your Systems

The Error

Running machine-speed AI agents on infrastructure designed for human-speed interaction.

The Failure

API saturation, degraded performance, and unexpected cost spikes caused by excessive system requests.

Scenario

An AI-powered support agent queries backend systems for every interaction, generating tens of thousands of API calls per hour and impacting system performance across the organization.

Reality Check

AI agents can generate far more requests than human users, and they do so at machine speed. Infrastructure must be load-tested and optimized before AI is deployed at scale.
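One common way to protect backend systems is to put a rate limiter between the agent and the APIs it calls. The sketch below uses a token bucket, a standard technique chosen here for illustration and not something the scenario above prescribes. The class name and the numbers in the usage note are assumptions.

```python
import time


class TokenBucket:
    """Allow `rate` calls per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock              # injectable so the limiter can be tested
        self.tokens = float(capacity)   # start with a full bucket
        self.last = clock()

    def try_acquire(self):
        """Return True if a call may proceed now, False if it should wait or queue."""
        now = self.clock()
        # Refill in proportion to the time elapsed, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

An agent would call `try_acquire()` before each backend request and back off, queue, or serve a cached answer when it returns `False`. For example, `TokenBucket(rate=5, capacity=20)` would cap a single agent at roughly five backend calls per second while still allowing short bursts.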

6. The Economic Misalignment

The Error

Defaulting to autonomous AI agents for all tasks without cost-benefit analysis.

The Failure

Using premium AI reasoning for simple tasks erodes margins and undermines ROI.

Scenario

An AI agent reasons through a basic data lookup that could be handled by a lightweight script at a fraction of the cost.

Reality Check

Tasks must be routed to the most cost-effective solution:

- Low-complexity, high-volume: scripts or embedded automation

- High-complexity, low-volume: autonomous AI agents
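The routing rule above can be sketched as a small decision function. The names, the volume threshold, and the third outcome are assumptions added for illustration: the two combinations the list does not cover (simple but rare, complex but frequent) are sent to a manual cost-benefit review here instead of being guessed at.

```python
from enum import Enum


class Handler(Enum):
    SCRIPT = "script or embedded automation"
    AGENT = "autonomous AI agent"
    REVIEW = "case-by-case cost-benefit review"   # assumption, not from the list above


def route(needs_judgment: bool, monthly_volume: int,
          high_volume_threshold: int = 10_000) -> Handler:
    """Pick a handler from task complexity and volume (threshold is hypothetical)."""
    high_volume = monthly_volume >= high_volume_threshold
    if not needs_judgment and high_volume:
        return Handler.SCRIPT      # low complexity, high volume
    if needs_judgment and not high_volume:
        return Handler.AGENT       # high complexity, low volume
    return Handler.REVIEW          # combinations the rule does not cover
```

Under these assumptions, the basic data lookup from the scenario above, run tens of thousands of times a month, would route to `Handler.SCRIPT`.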

What This Means for 2026

AI success in 2026 will be defined by stability, governance, and scalability—not by the number of AI tools deployed. Organizations that succeed will design AI as part of their core architecture, not as a layer added to broken systems.
